Observing the data

For a long time, I've strongly held the opinion that the right way to analyse data is by observing it, rather than following a set of procedures. We will bring the same ethos to Babbage Insight.

This is a sanitised version of an internal note I wrote earlier this week, modified for public consumption.

Two schools of data analysis

The classic way of getting insights from data involves what can simply be called “observing data”. It is useful to have some kind of a visual tool for this - MS Excel is great if your data is not too large. Otherwise, you might want to use a notebook, such as Quarto or Jupyter (I strongly prefer the former).

The basic idea is that when you “observe data”, you get insights that you wouldn’t normally get if you were to analyse it in a procedural or algorithmic manner. You notice quirks in the data that summary statistics might miss. You form hypotheses about how the data might have been collected, and that can drive your analysis. You notice patterns that might escape math models. By simply visually inspecting the data, you will be able to get strong insights in terms of how to analyse it.
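As a toy illustration of a quirk that a summary statistic can hide, here is a minimal sketch in Python. The numbers are made up for this example, and the idea that `9999` is a placeholder for "amount unknown" is an assumption of the sketch, not something from a real dataset.

```python
from collections import Counter
from statistics import mean, median

# Hypothetical order values. 9999 is an assumed placeholder that some
# upstream system wrote whenever the real amount was unknown.
order_values = [120, 135, 9999, 110, 142, 9999, 128, 131, 9999, 125]

# The procedural view: a couple of summary statistics.
summary = {"mean": mean(order_values), "median": median(order_values)}
print(summary)

# The "observing" view: look at the actual values and how often each occurs.
counts = Counter(order_values)
for value, count in sorted(counts.items()):
    print(value, "x", count)

# Values that repeat are worth a second look and a question to whoever
# collected the data.
repeated = [value for value, count in counts.items() if count > 1]
print("repeated values:", repeated)
```

The median on its own looks perfectly plausible here; it is only on looking at the values themselves that the repeated `9999` stands out and prompts a hypothesis about how the data was collected.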

Modern data science, however, has unfortunately done away with this practice of “observing data”. As the industry has scaled rapidly, there has been a need for Fighterization, and consequently a process to process the data. Ask any young data scientist (in their twenties) to inspect some data, and what you will see is a wholly procedural way of doing it - first look for data formatting, then for missing values, then for outliers, and so on. They even have a term for it - they call it “doing EDA” (a phrase I’ve come to loathe).

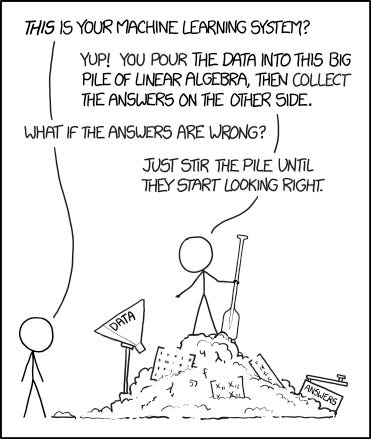

A couple of months ago, I joined a WhatsApp group of IITM Entrepreneurs. There, I mentioned that I’m building something using AI, and an old colleague DMed me saying he was “surprised that I was doing something using AI, since I had always been anti-AI” (referring to my battles in favour of observing the data rather than stirring the pile).

In that sense, “observing the data” is what has defined my career as a data scientist (early readers of this newsletter might remember this as one of the main things I used to talk about here). And that has been my competitive advantage compared to most vanilla data scientists.

What this means for our product

Recently, I came across this tweet:

At the time, I had responded with this. I wasn’t sure when I tweeted it, but I’m sure now.

The more I think of it, “observing the data” is going to be absolutely core to Babbage Insight. If I am building a system that is going to unearth insights into the data, it ought to be capable of observing the data. It needs to observe and internalise what is happening to the data, and when new data arrives, use this “understanding of the data” to find Exceptional Insights.

In some ways, this is precisely what my experience of the last dozen years points to, and this is what has set me apart as a data scientist. Until now, with the available technology, it hadn’t been possible to build a system that could “observe the data and get a feel for it” (I’d first sketched a cartoon of what is now Babbage Insight way back in 2014). Now, with the existence of LLMs, this is no longer the case. Babbage Insight’s products will be able to observe the data, and “understand” it, and then apply this understanding to any new data.

A loose, but not incorrect, analogy would be to compare the approaches of “ordinary, brute force” chess playing engines such as Stockfish, and how they got upended by the reinforcement learning based “creative” engines such as AlphaZero.

Babbage Insight is going to be more AlphaZero and less the brute-force Stockfish, adapted to the LLM era. That said, the “Stockfish” of what we are looking to do also doesn’t exist, and it is possible that we might end up building that along the way.

100%. And going a step further, observe the actual source of the data at the most fundamental level possible to know how to make sense of the data.

Because the principle here is that the more layers of abstraction you apply, the more information you lose.

Physical process > data about physical process > summary of that data > etc...