Pilots and copilots

A product that is a "copilot" assumes that its users have the ability to be "pilots".

Out on my personal blog, a couple of weeks ago, I wrote about my first ever experience with vibe coding. It had nothing to do with work (and hence went on that blog).

It is a long post full of bullet points, and so I didn’t get an opportunity to expand on my key “insight” from there, which is:

For you to use a copilot, you need to fundamentally have the skills to be a pilot in the first place

And that is surely relevant to work stuff, and so this post is on this blog.

Copilot begins

It all started with GitHub. Relatively early in the period in which LLMs have been ubiquitous, they released GitHub Copilot to help people code better (ChatGPT says this was made generally available in June 2022 - I certainly heard about it a few months later). A year and a bit later, by the time we started Babbage Insight, copilots were all over the place.

People, especially potential investors, would assume that we were building a “copilot for data analytics”. A lot of them still do. In fact, a lot of our branding / communication (not sure if any of it has stuck) since we started has been that “we are NOT a copilot”. I’ve written about this before.

Why we are NOT building an analyst copilot

I first wrote this on LinkedIn last week. Copying it here for preservation and search-ability.

A couple of months ago, in San Francisco, I met an old friend who is a Venture Capitalist. He told me, “I don’t know why you are going with an anti copilot messaging. Among VCs, copilots are hot now”.

Aviation to software

I’ll defer to ChatGPT again (I guess I’m using it as a “copilot” for this article?):

"Copilot" originally refers to the second pilot in an aircraft, responsible for assisting the captain in flying the plane. It's been used in aviation since at least the early 20th century.

The metaphor has since been adopted widely in tech to imply human-in-the-loop support: something that helps a human operator but doesn't take over completely.

(emphasis is ChatGPT’s)

And then later it says

"Copilot" now typically refers to:

An AI assistant embedded into a specific workflow

Enhances rather than replaces the human

Often context-aware, using code, documents, or previous activity as inputs

There is a very clear meaning of the word “copilot” here, in both the aviation and GitHub contexts - it assumes the presence of a pilot.

About Analytics Copilots

Now let us think about this in the context of data analytics. What does “analytics copilot” (a phrase we see thrown around pretty much everywhere) really mean?

An analytics copilot is an entity that is capable of assisting an analyst but not capable of performing data analysis by itself.

In order to use an analytics copilot effectively, you use it to assist an already skilled analyst. The analyst outsources certain tasks to this copilot, while owning the end to end analysis by herself.

Here are some example tasks that an analytics copilot can do:

Take instructions in natural language and produce a visualisation from the data

Take instructions to extract data from given sources, and then extract it (basically by writing code to do so - LLMs can’t do these tasks natively) and possibly clean it

Take data requests in natural language, convert them to SQL statements and then run those statements to fetch the data. Given instructions, the copilot may also analyse the fetched data, generate insights from it and convey them to the user (perhaps using visuals).
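To make the third task concrete, here is a minimal sketch of the "natural language to SQL to data" flow. Everything in it is an assumption for illustration: `fake_llm_to_sql` is a hypothetical stand-in for a real LLM call (it just looks up canned SQL), and the `sales` table and its columns are made up. Note what the sketch leaves out: nothing checks whether the generated SQL actually answers the question. That is the pilot's job.

```python
import sqlite3


def fake_llm_to_sql(request: str) -> str:
    """Hypothetical stand-in for an LLM call: returns canned SQL for a known request."""
    canned = {
        "total sales by region": (
            "SELECT region, SUM(amount) AS total "
            "FROM sales GROUP BY region ORDER BY region"
        ),
    }
    return canned[request.lower()]


def run_request(conn: sqlite3.Connection, request: str):
    """Translate the request to SQL, run it, and return both the SQL and the rows."""
    sql = fake_llm_to_sql(request)
    rows = conn.execute(sql).fetchall()
    return sql, rows


# Illustrative in-memory data (made up for this sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("North", 100.0), ("South", 50.0), ("North", 25.0)],
)

sql, rows = run_request(conn, "Total sales by region")
print(sql)   # the analyst can read and sanity-check this
print(rows)
```

Returning the SQL alongside the rows is deliberate: a skilled analyst can read the query and decide whether to trust the result, which a business user typically cannot.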

That it is a copilot means that it works under the assumption that the user is a “pilot”, and knows what she is doing. This means that the intended user of an analytics copilot is the analytics team.

Wrongly targeted copilots

Like my VC friend in SF told me, copilots are possibly “hot” in VC circles right now. And so even people who are not building copilots are claiming that they are. And this leads to product-marketing misalignment.

On the same SF trip, at a meetup, I met a former entrepreneur (now a VC) who had built and shut down an analytics copilot company. One of the reasons his company didn’t take off, he said, was that it ended up increasing the work of analysts at his customer companies.

It was a copilot, but targeted at business users. Since it was built on an LLM, there was some hallucination, and so the business users would frequently ask their analytics counterparts to verify its output. And so, rather than the analytics team’s work going down, it actually went up. And so POCs wouldn’t convert.

If you are calling something a copilot, it means that it ought to be used by someone who is capable of doing the same work by themselves.

So an analytics tool that takes natural language to fetch and analyse data, and that is targeted at business users, is NOT a copilot.

Turning copilots into pilots

If you are building an analytics tool that is to be used by business users, it actually needs to be an analytics “pilot”. That means it should be able to handle end to end tasks that are currently performed by analytics teams. What do you need to do to turn copilots into pilots?

Here are a couple of random thoughts (this is by no means an exhaustive list):

You might be tired of hearing this from me, but you need to up your verification game. LLMs hallucinate (and that is not always a bad thing - hallucination is the bedrock of creativity). You need to take steps to verify what the LLM says and does. Basically, take steps so that the business user will trust the output.

At some level, for data, this is easy - you can actually analyse the data to verify if the output is correct. You will likely use an LLM to perform this verification as well, but it is better than nothing.

Make sure the interface and language are crafted such that a business user will find it easy to work with this “analytics pilot”. An unrelated example here is a presentation to a client’s CEO that I had seen from his internal analytics team - it had things like p values and AUC scores - stuff that CEOs are likely to ignore.

Take a small specific problem and solve it. The more generic you make your product, the more ways in which things can go wrong, and the greater the chance that something will go wrong. So use a highly circumscribed use case, and you should be good.
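The verification point can be sketched in a few lines. This is only an illustration under stated assumptions, not a description of any particular product: the `Claim` structure, the `verify` and `first_verified` helpers, the tolerance and the revenue numbers are all hypothetical. The idea is that a generated statement about the data is accepted only if recomputing it from the data agrees.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Claim:
    """A generated statement of the form: <metric> changed by <pct_change>% between two periods."""
    metric: str
    period_from: str
    period_to: str
    pct_change: float  # what the generator asserts


def verify(claim: Claim, data: dict, tolerance: float = 0.5) -> bool:
    """Recompute the percentage change from the data and compare it with the claim."""
    series = data[claim.metric]
    old, new = series[claim.period_from], series[claim.period_to]
    if old == 0:
        return False  # percentage change is undefined, so do not accept the claim
    actual = (new - old) / old * 100
    return abs(actual - claim.pct_change) <= tolerance


def first_verified(candidates: list, data: dict) -> Optional[Claim]:
    """Return the first candidate claim that survives verification, if any."""
    for claim in candidates:
        if verify(claim, data):
            return claim
    return None


# Illustrative data and candidate claims (made up for this sketch).
data = {"revenue": {"2024-01": 200.0, "2024-02": 230.0}}
good = Claim("revenue", "2024-01", "2024-02", 15.0)
bad = Claim("revenue", "2024-01", "2024-02", 40.0)

print(verify(good, data))
print(verify(bad, data))
print(first_verified([bad, good], data))
```

Here the verifier is plain arithmetic, which is the easy case. When the verifier is itself an LLM, its verdict is less certain, so it is better treated as a filter than as a guarantee.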

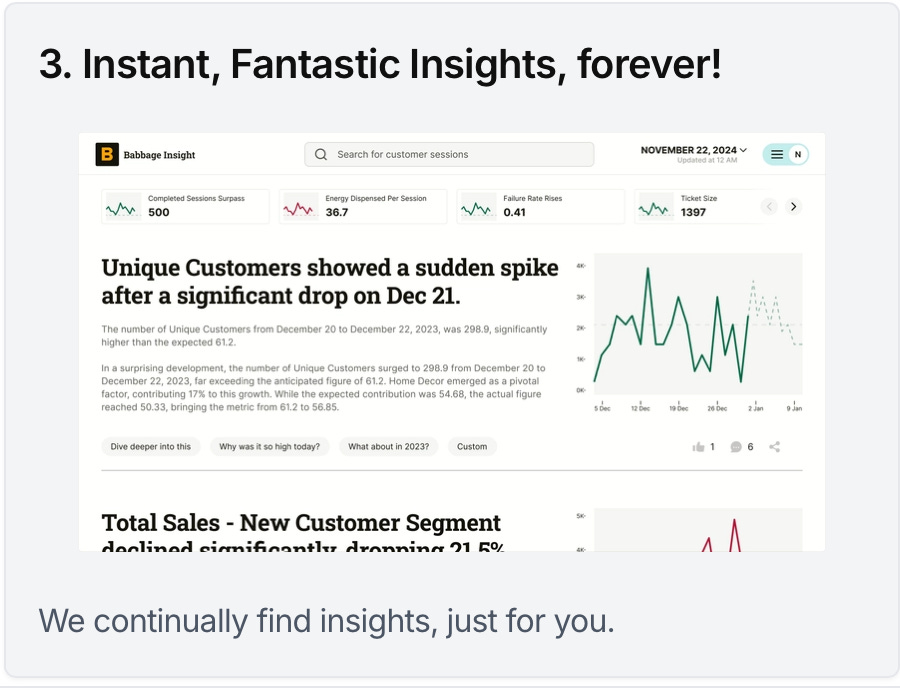

Our new website

All this talk of building “pilots” makes this a good time for me to plug Babbage Insight’s new website, which I think is pretty cool (and I didn’t make it). Looking at it, you will see how we are building an analytics “pilot”.

We have taken a highly circumscribed problem: tell us your key metrics, and we will investigate them (and related metrics) and give you a “newspaper” of what is happening.

We have a “newspaper” output format, heavy in visuals and natural language, so executives can run their business rather than getting a second degree in data interpretation.

All components of our product use a generator-verifier framework in order to cut through the hallucination, and instead leverage the LLMs’ creativity.

Now, hopefully, I don’t need to explain any more on why (and how) we are not building a copilot!
